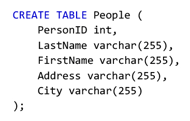

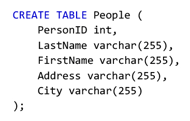

Let's now consider this in the context of Data and Databases. The most typical example of changing the state of a database is creating a table. We would all initially jump to something like:

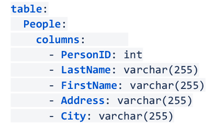

How do can we tell that this is an Imperative approach? The first word is a verb, “CREATE”. What would a declarative approach to the same goal be?

Our database doesn’t speak YML. Like most systems it can only follow Imperative instructions, so we can’t just use this directly. We can, however, pass our Declarative definitions into a compiler, which will also look at the current state and produce an imperative output.

This Declarative approach all sounds like a lot more work, why would we bother with it? It all comes down to state. Consider a case where the initial state is “no People table”:

- The Imperative approach creates a People table with 5 columns

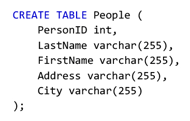

- The Declarative compiler takes the Declarative definition, looks at the current state, determines that there is no table and therefore the best way to turn the current state into the desired state is to execute:

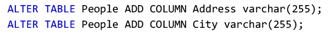

The end result is the same. However, consider the case where the initial state is “People table already exists with only 3 columns (PersonID, LastName, FirstName)”:

- The Imperative approach executes:

- The Declarative compiler takes the Declarative definition, looks at the current state, determines that there is already a table with 3 columns and therefore the best way to turn the current state into the desired state is to execute:

- This works!

The power of the Declarative approach is that, because it can take into account the initial state, it can dynamically create the correct Imperative instructions. Back to the Sherlock Holmes analogy, getting directions from Google Maps™ will work from anywhere because the Imperative instructions it generates are based on my current location—change the start location, and ask for directions and it will generate you a different set.

Put another way, Imperative Instructions are usually based on an assumed initial state and become invalid and inexecutable in any other initial state.

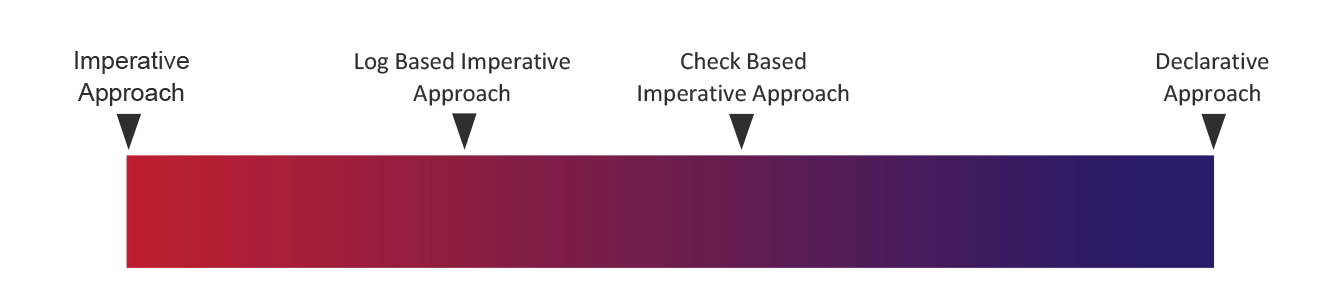

As discussed above these two ‘pure’ approaches are points on a spectrum, with the Log Based and Check Based Imperative approaches being points on that spectrum.

What does this mean today? For the DataOps for Snowflake platform we believe that a full Declarative Approach is the long-term approach for the whole industry. When looking at how other technologies areas (e.g. infrastructure, networking etc) have progressed, these have all followed an inexorable march from Imperative to Declarative over time, but this has taken some time to mature. We do not believe the technologies are quite there today, and we are actively working on projects to make this a reality. However, we believe all use cases today are possible using a set of approaches:

- Use “CREATE OR REPLACE X” where possible (i.e. the target object is stateless, or the state doesn’t matter)

- Use “CREATE X IF NOT EXISTS” where possible

- Use the conditional execute approach (and possibly some convenience wrappers to make specific high frequency use cases even easier)

In the future a Declarative Approach will be a natural evolution of this.

In the next series of blog posts we will look specifically at an implementation approach to Check Based Imperative Approach to Schema Management.